TL;DR: AI fails more from org design than model choice. Stop centralizing. Build AI-native 2-Pizza teams with single-threaded owners—AI, data, and product in one pod—so prototypes become products in 6–8 weeks, not 6 months. Your AI strategy is your org strategy.

After 16 years scaling teams at Amazon and Microsoft, then building Sparkry.AI from zero, I've learned a harsh truth: How you organize people matters more to your AI strategy's success than which models you choose.

Are you still centralizing AI teams and wondering why your demos never ship?

The Evidence Is Damning

McKinsey estimates that organizations waste $200 billion annually on failed AI initiatives, with 74% of Fortune 500 companies retrofitting AI onto outdated org structures designed for 2019. In those structures, AI work routes through a centralized team, and that team becomes the bottleneck that kills velocity.

Meta just spent the summer offering $100M+ packages to poach AI researchers, yet its own internal reports show "spread-out AI teams often lead to duplicated efforts, which can delay innovation and reduce efficiency." Teams get formed and disbanded in weeks, researchers fight for credit, and senior leaders disagree on technical approaches.

The companies winning? Those building AI-native 2-Pizza Teams with single-threaded ownership—the Amazon model reimagined for AI.

What Is a 2-Pizza Team?

A 2-Pizza Team is a small, autonomous unit (typically 5-8 people) that can be fed with two pizzas. Coined at Amazon, it represents the optimal size for rapid decision-making and clear accountability. In the AI era, these teams embed AI expertise directly into product development rather than depending on centralized AI groups.

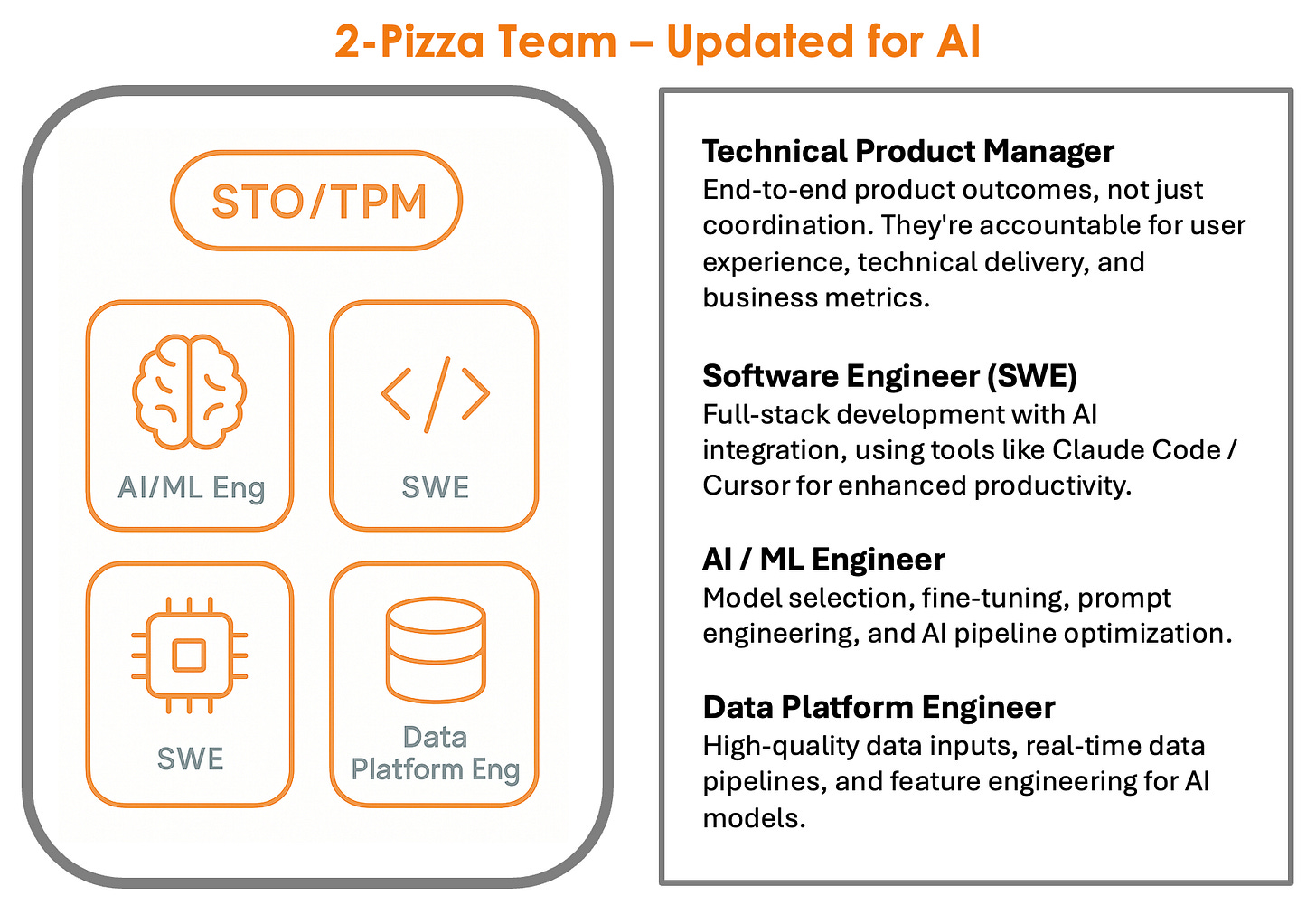

Here's the structure that actually works:

Single Threaded Owner (Technical Product Manager)

What they own: End-to-end product outcomes, not just coordination. They're accountable for user experience, technical delivery, and business metrics.

Key attributes to look for:

Systems thinking: Can connect AI capabilities to user problems

Technical depth: Understands model limitations and can make informed tradeoffs

Business acumen: Owns KPIs and can articulate ROI

Communication skills: Translates between technical teams and business stakeholders

Legacy roles that transition well: Senior Product Managers with technical backgrounds, Technical Program Managers, Solutions Architects who've worked closely with product teams

AI/ML Engineer

What they own: Model selection, fine-tuning, prompt engineering, and AI pipeline optimization.

Key attributes to look for:

Practical AI skills: More focused on shipping than research

Production mindset: Understands reliability, monitoring, and debugging AI systems

Cost awareness: Can balance model performance with infrastructure costs

Integration skills: Can connect models to existing systems and APIs

Legacy roles that transition well: Data Scientists with engineering skills, Backend Engineers with ML experience, DevOps Engineers who've worked with model deployment

Software Engineers (1-2 people)

What they own: Full-stack development with AI integration, using tools like Claude Code or Cursor for enhanced productivity.

Key attributes to look for:

AI-augmented development: Comfortable with AI coding assistants

API-first thinking: Can design clean interfaces between AI and traditional systems

User experience focus: Understands how AI affects product flows

Debugging skills: Can troubleshoot issues that span AI and traditional code

Legacy roles that transition well: Full-Stack Engineers, Frontend Engineers with backend experience, Backend Engineers with UI skills

Data Platform Engineer

What they own: High-quality data inputs, real-time data pipelines, and feature engineering for AI models.

Key attributes to look for:

AI-native data skills: Understands vector databases, embeddings, and streaming data for AI

Quality obsession: Builds systems that detect and prevent data quality issues

Real-time expertise: Can handle low-latency (millisecond-scale) requirements for AI inference

MLOps knowledge: Understands model retraining pipelines and data versioning

Legacy roles that transition well: Data Engineers with streaming experience, Backend Engineers with data pipeline skills, Site Reliability Engineers with data platform experience

Implementation Example: Building Customer UI Applications

Instead of a typical internal enterprise use case, let me walk you through how a company would build a common customer-facing application: say, an intelligent product recommendation system for e-commerce.

Traditional Approach (6+ months):

Business team defines requirements

AI team builds models in isolation

Engineering team builds UI

Integration nightmare begins

Months of back-and-forth debugging

2-Pizza Team Approach (6-8 weeks):

Weeks 1-2: The Technical PM and AI Engineer work with customer data to understand purchase patterns while the Software Engineer prototypes the UI using Lovable for rapid development.

Weeks 3-4: The Data Platform Engineer ensures clean, real-time product catalog data flows while the AI Engineer fine-tunes recommendation algorithms based on actual user interactions from the prototype.

Weeks 5-6: Full integration testing, with n8n handling workflow automation between systems and the entire team iterating on model performance and user experience simultaneously.

Weeks 7-8: Production deployment with monitoring, A/B testing, and continuous model improvement pipelines.
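To make the Weeks 7-8 A/B testing step concrete, here is a minimal sketch of how a team might check whether a new recommendation model actually moved click-through. It assumes a simple two-proportion z-test on click counts; the function name and the sample numbers are hypothetical, and a real rollout would likely lean on an experimentation platform rather than hand-rolled statistics.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare variant B against control A.

    Returns (absolute lift, z statistic, two-sided p-value).
    conv_* are click (or purchase) counts, n_* are session counts.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B perform the same
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("No variance in the data; cannot compute a z statistic")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value

# Hypothetical numbers: 10,000 sessions per arm
lift, z, p_value = two_proportion_z_test(500, 10_000, 580, 10_000)
print(f"lift={lift:.3%}, z={z:.2f}, p={p_value:.4f}")
```

The point is less the statistics than the ownership: the same pod that shipped the model can read this result and decide what to change next.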

The key insight: When everyone on the team understands both the AI capability AND the user problem, iteration cycles compress dramatically.

Pros and Cons of the 2-Pizza AI Team Approach

Pros:

Rapid UI ↔ Model ↔ Data iteration

True ownership of both UX and AI behavior

No waiting on separate AI teams to prioritize requests

Clear accountability for outcomes

Faster learning cycles and product-market fit discovery

Cons:

Higher talent acquisition costs (specialized hybrid roles)

Potential technical drift without strong architectural oversight

Coordination challenges across multiple autonomous units

Risk of duplicated infrastructure work

How to Mitigate the Cons

Technical Drift: Establish weekly forums led by Principal Engineers who set architectural standards across teams. Create shared libraries and Claude-powered code review processes.

Coordination Overhead: Use shared design systems, component libraries, and internal APIs that teams can leverage. Tools like n8n can automate cross-team workflows.

Talent Competition: Create internal mobility programs where engineers can rotate between teams every 12-18 months. Invest in continuous learning with resources like The AI-First Company and LLM Engineer's Handbook.

Infrastructure Duplication: Maintain shared services for authentication, payments, and core AI infrastructure while letting teams own product-specific AI features. Consider RunPod for shared GPU resources across teams.

Implementation Advice: Start Small, Prove Value

Phase 1 (Months 1-3): Pilot with 1-3 teams working on distinct product areas. Choose teams with clear success metrics and supportive leaders.

Phase 2 (Months 4-6): Document what works, refine the model, and train more leaders on the approach. Measure velocity and quality improvements.

Phase 3 (Months 7+): Expand to more teams based on proven results. Create internal playbooks and hiring guides.
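One way to make "measure velocity" in Phase 2 tangible is to track lead time from first prototype to production launch for each pilot team. The sketch below assumes you log those two dates per feature; the feature names and dates are hypothetical placeholders, not real results.

```python
from datetime import date
from statistics import median

# Hypothetical records: (feature, first prototype date, production launch date)
launches = [
    ("recs-v1", date(2025, 1, 6), date(2025, 2, 24)),
    ("search-rerank", date(2025, 2, 3), date(2025, 3, 31)),
    ("cart-upsell", date(2025, 3, 3), date(2025, 4, 14)),
]

def lead_time_weeks(started, shipped):
    """Weeks elapsed between the first prototype and the production launch."""
    return (shipped - started).days / 7

def median_lead_time_weeks(records):
    """Median prototype-to-production lead time across all records."""
    return median(lead_time_weeks(started, shipped) for _, started, shipped in records)

print(f"Median lead time: {median_lead_time_weeks(launches):.1f} weeks")
```

Comparing this number for pilot teams against your pre-pilot baseline gives the monthly retrospectives something objective to discuss.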

Tip: Ask your team to give this 100% and try to make it successful. Commit to monthly retrospectives to enable course-correction. Guarantee a retrospective after 3 months (schedule it now) where the team can weigh in on whether this approach continues.

Cost Reality Check:

AI Platform Engineers: $150K-$240K

AI Product Managers: $180K-$280K + equity

Data Platform Engineers: $160K-$250K

Total team cost: $700K-$1.1M annually (vs $2-5M for centralized AI initiatives that often fail to reach production)
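As a quick sanity check on these numbers, the sketch below sums the three listed salary ranges and compares them with the quoted team total. It assumes one person per listed role and treats whatever is left as the budget for the 1-2 Software Engineers, whose range is not listed above; equity and overhead are ignored.

```python
# Salary ranges quoted above, in thousands of USD per year: (low, high).
# Assumption: one person in each listed role.
listed_roles = {
    "AI Platform Engineer": (150, 240),
    "AI Product Manager": (180, 280),  # excludes equity
    "Data Platform Engineer": (160, 250),
}
team_total = (700, 1100)  # quoted total team cost

listed_low = sum(low for low, _ in listed_roles.values())
listed_high = sum(high for _, high in listed_roles.values())

# Assumption: the remainder covers the 1-2 Software Engineers.
remainder = (team_total[0] - listed_low, team_total[1] - listed_high)

print(f"Listed roles: ${listed_low}K-${listed_high}K")
print(f"Implied budget for remaining roles: ${remainder[0]}K-${remainder[1]}K")
```

Swap in your own market ranges and headcount; the structure of the calculation matters more than these specific figures.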

The Tough Questions You Need to Answer

Who among your current leaders can own a product with full P&L, data, and AI accountability today? If the answer is "no one," you need to re-architect your org before adding AI.

Are you optimizing for coordination or velocity? Centralized AI teams optimize for coordination. 2-Pizza Teams optimize for shipping.

Can you measure AI success at the product level? If you can't tie AI improvements to user outcomes, your org structure won't save you.

What's your biggest bottleneck: talent, data, or decision-making? Be honest—the 2-Pizza model only works if decision-making is your bottleneck.

Your AI strategy IS your org strategy. The companies that figure this out first will have an insurmountable advantage.

What's your biggest organizational challenge in AI adoption? Comment with your current structure—or forward this to someone who's redesigning their team for the AI era.

P.S. If you're building your own AI-first business, check out BlackLine—our family-run business that's using these exact organizational principles to compete with much larger apparel brands. Sometimes the best way to understand strategy is to see it in action.
